Containerized Science

An introduction to how BRIC is using software containerization in the service of science.

Two of the challenges I’ve encountered creating research software for the past two decades typically occur at the beginning and the end of a study: marshaling the necessary prerequisites for a new system, and decommissioning the system at the end of the study, with an eye towards potential requests for data or some functionality after the end.

In my past life as the Chief Developer at Audacious Software, I discovered that institutions (and their IT departments) are often like snowflakes: no two are exactly the same. In one institution, I may have a lengthy form to fill out to request server resources, and then I’m handed the keys to a new server to set up as I wish (in the form of a local account with “sudo” access). At another institution, I may be given a folder to upload my files, but the local IT department reserves the right to set up and install software packages (for very legitimate reasons). And finally, for yet another institution, I may end up hosting their study on my own infrastructure, typically in the Amazon or Azure clouds.

I’ve been building and setting up servers since the mid-1990s using the free CDs I received as part of a fat “Learn Linux” book that may have included early RedHat, Debian, or Slackware distributions. (I once even went way back and installed Minix on a spare 286 PC.) For new projects, navigating these initial hurdles has never been much of an issue for the first instance of a new research software system, but I discovered that sharing that software usually wasn’t that simple once we crossed institutional borders, with different standards (RedHat Enterprise Linux/RHEL vs. Ubuntu), security policies (local IT provides SSL certificates vs. using Let’s Encrypt), and access methods (SSH over a VPN vs. access provided through a dedicated console like BeyondTrust).

These are not new challenges, and I always tried to build systems with as few assumptions about the underlying platform as possible. If you look at my server work, that typically manifests as Python systems (usually Django) that persist data in classical relational database management systems (Postgres and SQLite most often) using an object-relational mapping that allows me to swap out the underlying database software without an impact on the system’s features and functionality. This has worked well for me, even to the point where I’ve been able to get systems built for Linux running on platforms like Windows.
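A minimal sketch of what that database swap can look like in a Django settings file is below. It is illustrative only and not taken from any of our projects: the environment variable names (`DB_KIND`, `DB_NAME`, `DB_USER`, `DB_PASSWORD`, `DB_HOST`, `DB_PORT`) and the helper `database_settings` are hypothetical, while the two engine paths are Django's built-in Postgres and SQLite backends.

```python
import os

# Django's built-in backend paths for the two databases mentioned above.
ENGINES = {
    "postgres": "django.db.backends.postgresql",
    "sqlite": "django.db.backends.sqlite3",
}


def database_settings(env=None):
    """Build a Django-style DATABASES dict from environment-style variables.

    All variable names read here (DB_KIND, DB_NAME, ...) are hypothetical
    names chosen for this sketch.
    """
    env = os.environ if env is None else env
    kind = env.get("DB_KIND", "sqlite")
    if kind not in ENGINES:
        raise ValueError(f"Unsupported DB_KIND: {kind!r}")

    if kind == "sqlite":
        # SQLite only needs a file path.
        return {
            "default": {
                "ENGINE": ENGINES[kind],
                "NAME": env.get("DB_NAME", "db.sqlite3"),
            }
        }

    # Postgres needs connection details in addition to the database name.
    return {
        "default": {
            "ENGINE": ENGINES[kind],
            "NAME": env.get("DB_NAME", "research"),
            "USER": env.get("DB_USER", ""),
            "PASSWORD": env.get("DB_PASSWORD", ""),
            "HOST": env.get("DB_HOST", "localhost"),
            "PORT": env.get("DB_PORT", "5432"),
        }
    }
```

In a settings module this would be used as `DATABASES = database_settings()`. The application code that talks to the ORM stays the same; only the environment changes between a laptop running SQLite and a server running Postgres.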

However, moving Software X from Institution A to Institution B always involved a translational exercise. For example, in studies that featured some form of geographic component, one of my constant headaches was getting all of the underlying dependencies installed that would allow me to use GeoDjango’s GIS data types for collecting, querying, and analyzing location data. This is a pretty straightforward exercise on a platform like Ubuntu, but can be a bit trickier to nail down in an RHEL environment where local IT needs a list of RPM packages to install themselves. On Windows, the difficulty is an order of magnitude larger, as you have to find the right combination of linear algebra libraries, GIS libraries, and a version of Postgres that will play nicely with each other and the version of Python you’ve installed locally.

One way to avoid this mess is to compile a virtual machine and provide it to your institution, which can install it on a protected network and use a web proxy to provide communication with the outside world. VMware has traditionally been the leading product in this space, but given that its new owner Broadcom seems intent on nuking the value of that brand for anyone who isn’t a large rich corporation (plus some nasty security vulnerabilities), I’ve seen attitudes cool towards that approach. So, looking to improve the state of distributing non-trivial software across institutions, we’ve been investing a lot of time in containers.

Containers are light(er)-weight alternatives to full virtual machines where all of the necessary prerequisites and the software itself can be installed into an image intended to be run across a variety of computing environments. Using virtualization technologies built into modern operating systems (Linux, Windows, and macOS), containers typically avoid the higher resource requirements of emulating another running machine within a host operating system (i.e., virtual machines).

Containers are typically built using minimal bases to reduce disk space and minimize the attack surface from having more software installed than is needed. Containers can be deployed as a single image hosting a single system, or as collections of multiple images working together. Multiple images (a service) are often required because each container is typically designed to only run one program, and more programs are often needed for a functional system (e.g. a web application server and a database).

The name “container” was chosen as a metaphor for the standardized shipping containers that revolutionized global trade. Instead of hiring an army of longshoremen to load and unload ships full of merchandise packed into a variety of sizes, shippers loaded goods into large metal boxes with standard locations for crane hooks and predictable sizes. Together with all the infrastructure designed for those standard boxes, this ushered in a world where the port didn’t need to know if you were shipping flat screen televisions or shoes – it was all the same to them. In software containers, the idea is similar. The host system doesn’t need to know what’s running within a container; it has standard ways of launching containers, and the container engine is designed to prevent one container from interfering with another.

At BRIC, we began exploring this approach as a way to get a developer familiar with Django up and running on a brand-new system without having to learn about GIS libraries, installing databases, or all of that other related complexity. We were able to create a system that used four containers with the Docker Compose tool, commit that to GitHub, and stand up a running copy of the system on local developer systems for work. To be honest, it took a bit of trial and error to get up and running, but we’re well past that point and reaping the benefits now.

To demonstrate how this works, a good example is our Passive Data Kit Django framework. If you visit our GitHub page, there are about eight printed pages of documentation detailing the prerequisites and steps needed to get a basic Passive Data Kit server up and running.

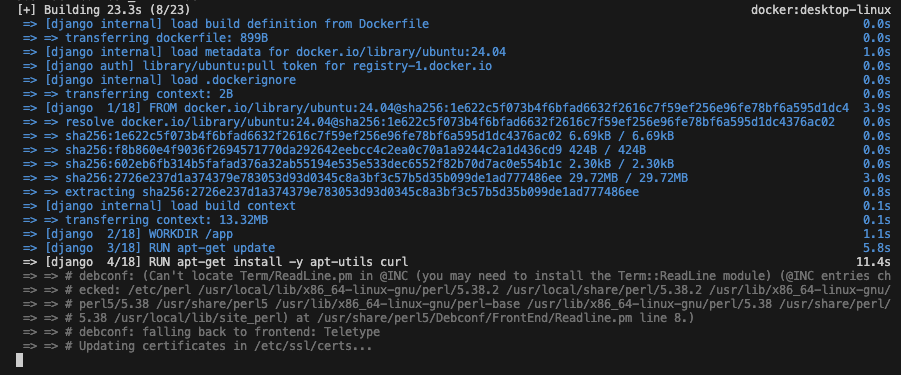

If you already have a container engine installed (Docker Desktop is a good one to get started with) and you just want to kick the tires of Passive Data Kit, you can be up and running with a handful of commands:

git clone https://github.com/audacious-software/PassiveDataKit-Django.git

cd PassiveDataKit-Django/docker

cp template.env .env

docker compose up

As the “docker compose up” command runs, you’ll see in your terminal window all of the steps that the container system is taking to find prerequisites, install them, initialize the database, and so forth.

When it gets to the end and you see that the Nginx container is running, you can access your own local copy of Passive Data Kit through your browser at

You can stop the server by returning to your terminal and issuing a Control-C command to quit the process or stop it using the GUI tools that Docker Desktop provides.

Now, this version that you’ve tested isn’t intended to be used for production deployment – there’s a set of variables that need to be configured in that .env file that you copied. In the Passive Data Kit case, this service actually contains four containers within it: a standard Postgres database (for storing data), a Django web application (for functionality), a cron container (for running background tasks), and Nginx, which serves as the front end of the system and proxies web requests between clients outside the Docker containers and the Django web application.
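Before moving from a test run toward production, it can help to check which values in the copied .env file are still empty or still hold a placeholder. The sketch below is one rough way to do that and makes several assumptions: the file uses simple `KEY=VALUE` lines, `changeme` is treated as a placeholder, and the variable names in the usage example are hypothetical rather than the ones in Passive Data Kit's actual template.env.

```python
def parse_env(text):
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def unconfigured(values, placeholders=("", "changeme")):
    """Return the sorted names of variables that are empty or placeholders.

    The placeholder list is an assumption made for this sketch.
    """
    return sorted(k for k, v in values.items() if v.lower() in placeholders)
```

For example, with hypothetical variable names:

```python
sample = 'DJANGO_SECRET_KEY=changeme\nPG_PASSWORD=\nSITE_NAME="My Study"\n'
print(unconfigured(parse_env(sample)))
```

In practice you would read the text from the `.env` file next to the compose file and refuse to deploy while the returned list is non-empty.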

I’ll go into more detail about how we design our containers and the lessons we learned in a future post (getting cron to run well was surprisingly challenging, and not all container services are created equal), but I wanted to wrap up this introduction by letting readers know that this approach has worked so well – from development through deployment – that containers are now our primary mechanism for distributing our research software systems to clients. It’s reduced our deployment times, as the only dependencies we typically have to request or install ourselves are the Docker container engine and an Internet-facing web server to direct traffic to the appropriate containers. This has been a big productivity win for us internally, and we are looking to share this approach with other research software developers.

In addition to research software developers, containers are a win for the scientists we’re serving.

Just before writing this post, I got off a call with the IT staff at one of our biggest clients. The topic was what to do with a few legacy research systems that were no longer being used. The challenge in a research context is that we can’t just shut them down and tell future interested parties that we’re out of business. (We could, but that is terrible for scientific replicability.) We discussed the best way to back up the databases. Do we just save Postgres dumps intended for a technician to set up a new database in response to a future data request? Do we try and translate the Postgres tables into CSV files, losing the richness of relationships between objects in different tables? Or what?

Container technology provides an intriguing answer – how about we back up the container images and data volumes directly from the server to a secure location, so that we can share our data and a running server with a simple “docker compose up” command? That solves a lot of big problems on the replicability front, as the software’s been isolated into a form that should run in a variety of contexts, independently of the original institution’s preferences or policies. It also provides interesting options to explore research software systems locally without committing to the investment of a server or cloud provider just to answer “will this work for me?”
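To make the backup idea more concrete, here is a minimal sketch that builds (but does not run) the Docker commands such a backup might use. It is an assumption about one workable approach, not a description of our actual procedure: images are exported with `docker save`, and each named volume is archived by mounting it read-only into a throwaway `alpine` container that runs `tar`. The function names, the image name, the volume name, and the backup directory are all hypothetical.

```python
import shlex


def image_backup_command(image, backup_dir):
    """Command that exports an image to a tar file with `docker save`."""
    # Make the image reference safe to use as a file name.
    safe_name = image.replace("/", "_").replace(":", "_")
    return ["docker", "save", "-o", f"{backup_dir}/{safe_name}.tar", image]


def volume_backup_command(volume, backup_dir):
    """Command that archives a named volume using a temporary container.

    backup_dir should be an absolute host path, since it is bind-mounted.
    """
    return [
        "docker", "run", "--rm",
        "-v", f"{volume}:/data:ro",      # the volume, mounted read-only
        "-v", f"{backup_dir}:/backup",   # host directory receiving the archive
        "alpine",
        "tar", "czf", f"/backup/{volume}.tar.gz", "-C", "/data", ".",
    ]


def backup_plan(images, volumes, backup_dir):
    """All commands needed to back up the given images and volumes."""
    commands = [image_backup_command(i, backup_dir) for i in images]
    commands += [volume_backup_command(v, backup_dir) for v in volumes]
    return commands


if __name__ == "__main__":
    # Hypothetical image and volume names, printed for review rather than run.
    for cmd in backup_plan(["postgres:16"], ["pdk_db_data"], "/srv/backups"):
        print(shlex.join(cmd))
```

Printing the commands instead of executing them keeps the plan reviewable by local IT before anything touches the server. A real backup would likely also stop the database container first so the volume is copied in a consistent state, and would keep the compose file and .env alongside the archives.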

At BRIC, we’re using containers to improve our development and deployment processes, and we look forward to sharing guides to getting started and the pitfalls to avoid as soon as we find the time to write them. We’re also investigating whether we can serve the scientific community as a trusted repository for research software containers. The idea is that if you follow a set of standards that we set for your container design, we can provide the infrastructure to host “frozen” copies of images and data volumes to assist the research community in much the same way that Hugging Face is serving the artificial intelligence community by hosting models and datasets.

Imagine a world where you read the latest paper in your field and the authors have created something interesting in software. Rather than having to chase down a copy of the software, find someone to install it locally, and only then get to the point where you can log in and use it, you could skip to that final step with two commands:

docker compose pull cool-new-research-software

docker compose up cool-new-research-software

That’s the world we’re working on building. We have all the pieces – we just need to arrange them into the right places now.